Software System

Navigation Algorithms

To localize the autonomous underwater vehicles (AUVs), we used cameras, hydrophones, an inertial measurement unit (IMU), a Doppler velocity log (DVL), and sonar. The DVL is crucial for our AUVs to hold target velocities and estimate their position. To make the most of the DVL data, we devised a proportional-integral-derivative (PID) controller whose output sums the error between target and current velocity with the derivative of that error. Run continuously, the controller produces a deceleration curve as the motors approach the target velocity: the error shrinks, so its derivative is negative and the derivative term reduces the command. As a result, the controller smoothly accelerates and decelerates our AUV to a target velocity.
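The velocity loop described above can be sketched as follows. This is a minimal illustration, not our flight code: the gains, the time step, and the toy plant (unit mass with linear drag) are assumptions chosen only so the example converges, and the class and function names are hypothetical.

```python
class PID:
    """Textbook PID on velocity error (gains are illustrative assumptions)."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, target, measured, dt):
        error = target - measured
        self.integral += error * dt
        # No derivative on the first sample, to avoid a startup spike.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(target=1.0, steps=400, dt=0.05):
    """Drive a toy plant (dv/dt = thrust - v) toward the target velocity."""
    pid = PID(kp=2.0, ki=1.0, kd=0.1)  # hypothetical gains
    v = 0.0
    history = []
    for _ in range(steps):
        thrust = pid.update(target, v, dt)  # v stands in for the DVL reading
        v += (thrust - v) * dt              # assumed plant, not a real AUV model
        history.append(v)
    return history
```

As the simulated velocity nears the target, the error shrinks, its derivative is negative, and the derivative term pulls the command down, which is the deceleration behavior described in the text.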

We also created an algorithm that uses DVL feedback to improve the accuracy of our DVL-derived AUV pose. Because the DVL reports velocity at known time intervals, integrating those velocities over time yields displacement. By setting an anchor (or origin) point in the TRANSDEC pool, we accumulate the AUV’s displacement over time and add it to the anchor point to compute the AUV’s current position relative to the origin. Task locations in the pool are likewise expressed as displacements from the origin. With these two vectors, our algorithm computes the path and distance needed to reach each task in the TRANSDEC pool.
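A minimal sketch of this dead-reckoning step, under simplifying assumptions: the velocity samples are taken to be already expressed in the pool frame (in practice they would need to be rotated using the IMU heading), motion is 2D, and the function names and sample values are hypothetical.

```python
import math


def integrate_position(anchor, samples):
    """Accumulate displacement from (vx, vy, dt) samples starting at the anchor.

    Assumes velocities are in the pool frame, in m/s, with dt in seconds.
    """
    x, y = anchor
    for vx, vy, dt in samples:
        x += vx * dt
        y += vy * dt
    return (x, y)


def vector_to_task(position, task):
    """Return (dx, dy, distance) from the current position to a task location."""
    dx = task[0] - position[0]
    dy = task[1] - position[1]
    return (dx, dy, math.hypot(dx, dy))


# Illustrative values only: 3 s at 1 m/s along x, then 2 s at 0.5 m/s along y.
samples = [(1.0, 0.0, 1.0)] * 3 + [(0.0, 0.5, 1.0)] * 2
position = integrate_position((0.0, 0.0), samples)
offset = vector_to_task(position, (6.0, 5.0))
```

Because both the AUV position and the task location are measured from the same origin, their difference gives the heading vector and straight-line distance directly.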

Mission Planning

Our mission planner has two parts: the decision maker and the mission scheduler. The decision maker works like a trade study, weighing variables such as the AUV’s current status and location, the time remaining, the point value of the remaining missions, and the probability of mission success. Missions that cannot be completed in the time remaining are excluded from consideration. After making and logging its decision, the AUV updates the mission scheduler with the new mission order and then sends the updated schedule to the other AUV. This way, both AUVs operate on the same mission schedule, and conflicts are avoided.
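One way such a trade study could be scored is sketched below. The scoring formula (expected points per second, including travel time), the cruise speed, and all point values and probabilities are assumptions for illustration; the actual weighting used by the decision maker is not specified here, and the names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mission:
    name: str
    points: float       # illustrative point value
    p_success: float    # estimated probability of success, 0..1
    duration_s: float   # estimated time on task
    distance_m: float   # distance from the AUV's current location


def total_time(m, speed_mps):
    """Travel time plus time on task."""
    return m.distance_m / speed_mps + m.duration_s


def score(m, speed_mps):
    """Expected points per second (an assumed scoring rule)."""
    return m.points * m.p_success / total_time(m, speed_mps)


def schedule(missions, time_remaining_s, speed_mps=0.5):
    """Drop missions that cannot finish in time, then rank the rest by score."""
    feasible = [m for m in missions if total_time(m, speed_mps) <= time_remaining_s]
    ranked = sorted(feasible, key=lambda m: score(m, speed_mps), reverse=True)
    return [m.name for m in ranked]


missions = [
    Mission("gate", 100, 0.9, 30, 5),
    Mission("buoys", 300, 0.5, 60, 10),
    Mission("octagon", 800, 0.4, 200, 30),
]
order = schedule(missions, time_remaining_s=240)
```

This sketch ranks missions independently and does not re-check the time budget after each mission is scheduled; a fuller planner would update distances and remaining time as the order is built.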

Computer Vision (CV)

In previous seasons of RoboSub and RobotX, we experimented with machine learning, Vuforia, and conventional computer vision (OpenCV). In RobotX, we supplemented our machine learning with Roboflow to classify images. We decided that OpenCV would be the most effective method for image detection and classification because it takes significantly less processing power. OpenCV also allows for greater customizability than machine learning. Thus, we applied OpenCV to the gate, buoys, bins, path, and octagon missions, with Vuforia for specific image recognition.

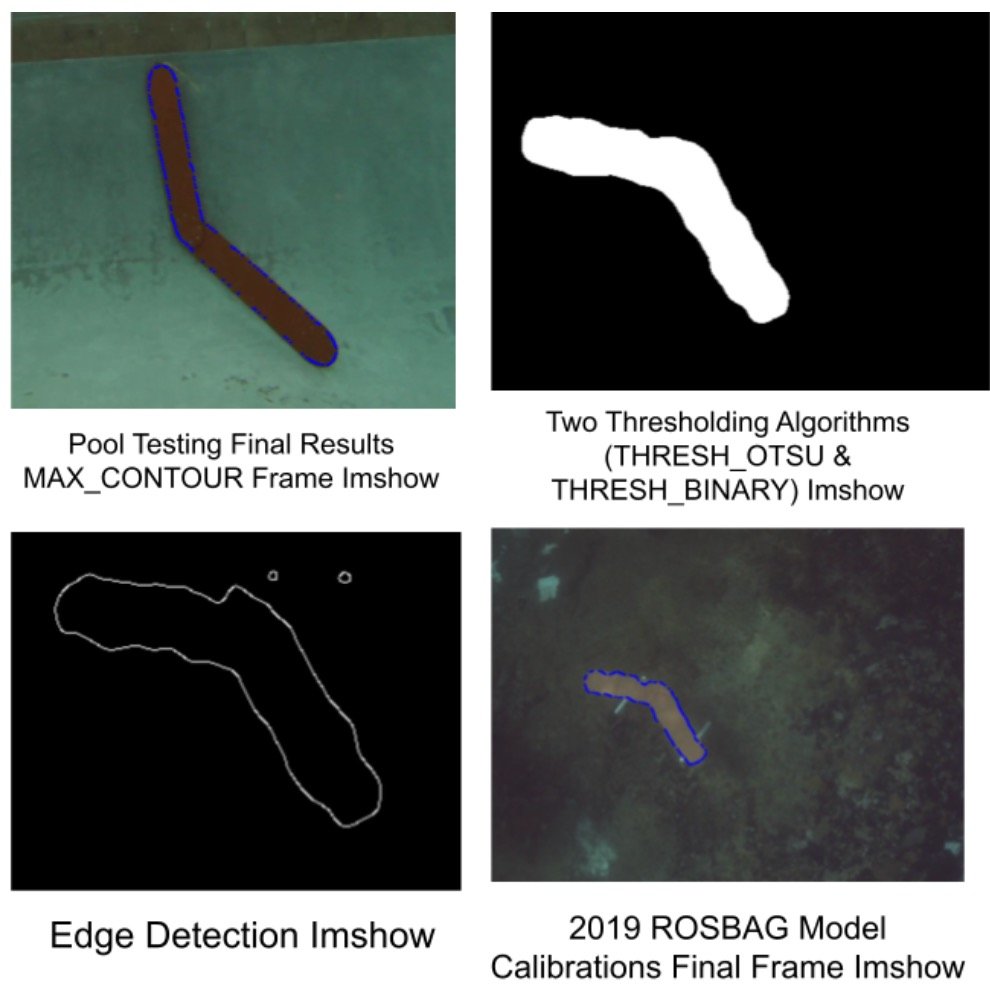

Our OpenCV code applies the following algorithms in order: color isolation, binary thresholding, erosion and dilation, area thresholding, and contour approximation. For the path mission, our OpenCV code consisted of HSV color isolation, Gaussian blurs, and skeletonization to remove noise caused by dirt, which created irregularities, followed by max contouring to obtain reasonable results. We also combined the OpenCV morphological opening operation with two sets of thresholding and edge detection functions to make the image cleaner and the pixel values more precise.

Testing

Our team tests frequently so that we have a strong proof of concept before we delve deeper into the development process. We conducted preliminary testing in a benchtop environment, where we could verify the functionality of all the components before putting them in the water. Additionally, we conducted individual water tests of the Ping360 sonar, torpedoes, gripper, and marker dropper to verify the software for each component before integrating it into the main program. This allowed us to avoid encountering issues when putting the AUV in the water.